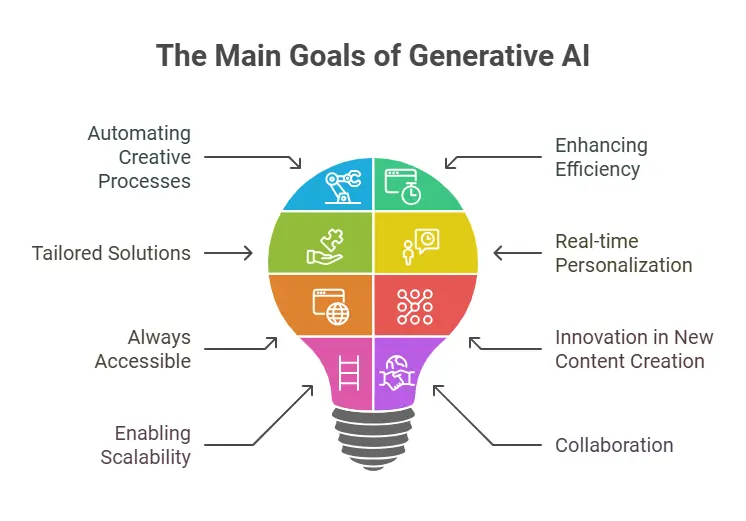

Generative AI has emerged as one of the most transformative technological advancements of the 21st century. It can create content, generate designs, simulate conversations, and even craft entire articles, such as this one, from minimal input. While these capabilities offer enormous potential in fields like entertainment, marketing, design, and education, they also raise significant ethical concerns. As we integrate generative AI into more industries, it’s crucial to recognize the moral responsibilities that accompany these technologies. This post explores some of the key ethical considerations when using generative AI.

Bias and Fairness

One of the most pressing ethical concerns with generative AI is the issue of bias. AI systems are trained on large datasets that are often sourced from human-created content. These datasets may inadvertently contain biases, such as racial, gender, or cultural stereotypes. When a generative AI system is trained on such data, it can perpetuate and even amplify these biases in its outputs.

For example, an AI tool designed to generate job application letters might use language that favors one gender over another or reflects stereotypes about certain ethnic groups. That bias becomes a discriminatory practice when it reaches automated hiring systems, where AI is increasingly used to screen candidates.

What can be done?

To mitigate bias in generative AI systems, it’s important to use diverse and representative training data. Additionally, AI developers should regularly audit their models to check for discriminatory outcomes and implement strategies to reduce bias. Ethical AI development requires that fairness is prioritized at every stage, from data collection to model deployment.
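One way to make the auditing step concrete is to compare how often a model's outputs favor each group. The following is a minimal sketch, not a complete fairness audit: the function names, the group labels, and the sample data are all hypothetical, and the 0.8 cutoff is only a common rule of thumb (often called the four-fifths rule) whose suitability depends on context and jurisdiction.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the model's output favored that candidate.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Return the lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group was selected at all, so no disparity to measure
    return min(rates.values()) / highest


# Hypothetical audit sample: (group label, was the candidate shortlisted?)
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # illustrative threshold, not a legal standard

print(rates, round(ratio, 2), "needs review" if flagged else "ok")
```

A single ratio like this cannot prove a system is fair, but tracking it across releases gives auditors an early signal that outcomes are drifting apart between groups.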

Copyright and Intellectual Property

Generative AI has raised new questions around copyright and intellectual property. When an AI generates content—whether it’s an artwork, a song, or a piece of writing—it may do so by mimicking patterns from existing works. This raises concerns about the ownership of the generated content. Who owns the rights to AI-generated art? Is it the person who created the AI, the one who prompted the AI, or the AI itself? These are questions that legal systems are still grappling with.

Furthermore, generative AI can be used to replicate the work of famous creators, leading to potential issues of infringement. Imagine an AI tool generating a piece of music that sounds remarkably like a popular song, or an AI creating art that closely resembles the style of a renowned painter. While AI may technically create these works independently, the influence of existing works on AI’s outputs can blur the lines between inspiration and infringement.

What can be done?

Clear guidelines must be developed around the ownership and licensing of AI-generated content. It’s essential for AI developers, creators, and lawmakers to work together to establish laws that protect intellectual property while fostering creativity. Additionally, users should disclose which AI tools they used and, where it is known, what material those tools were trained on.

Transparency and Accountability

Another ethical concern with generative AI is transparency. Many AI systems operate as “black boxes,” meaning that their internal workings are not easily understandable by humans. When AI produces content, it can be challenging to trace how it arrived at a particular result or to explain why the AI made a specific decision. This lack of transparency raises concerns about accountability, especially when AI is used in critical areas such as healthcare, finance, and law.

For example, if a generative AI tool is used to recommend medical treatments, and the recommendation turns out to be harmful, who is responsible for the consequences? Is it the developer who created the AI, the company that deployed it, or the user who followed the AI’s suggestions?

What can be done?

Developers must prioritize transparency in their AI models. This can involve building explainable AI (XAI) systems that allow users to understand how and why certain outputs are generated. Additionally, ethical frameworks should be put in place so that the developers and organizations behind AI systems are held accountable for those systems’ outputs, with mechanisms to correct or address the harm they cause.

Misinformation and Manipulation

Generative AI can be used to create convincing but false content, such as deepfakes—realistic videos or images that can make it appear as though someone said or did something they did not. This capability can be used for malicious purposes, such as spreading misinformation, defaming individuals, or even influencing political elections.

The ability to generate realistic fake news articles or manipulate audio and video content to mislead the public is a powerful tool that could be abused. The consequences of such actions are profound, as they can erode trust in media, destabilize societies, and cause personal harm.

What can be done?

To address these risks, AI developers and platform providers must implement safeguards to detect and prevent the spread of harmful content. This includes developing tools to identify deepfakes, promoting digital literacy to help users identify misinformation, and creating guidelines around the ethical use of generative AI. Media organizations must also work together to establish ethical standards that ensure the responsible use of AI.

Human Creativity and Job Displacement

Generative AI’s ability to produce creative works and automate tasks raises concerns about the future of human labor and creativity. While AI can assist in creative processes, it also has the potential to displace jobs, particularly in industries like journalism, design, music, and writing. For instance, AI-generated art and writing may replace human artists and writers, leading to concerns about job loss and reduced opportunities for creative professionals.

At the same time, there is a risk that AI could lead to a homogenization of creativity. As AI systems are trained on existing works, they may produce content that lacks originality or the unique touch that human creators bring to their work.

What can be done?

AI is best treated as a tool that augments human creativity rather than replaces it. While AI can handle repetitive tasks or assist with brainstorming, it is human intuition, emotion, and experience that provide depth to creative work. In this context, workers should be given opportunities to reskill and upskill so they can work alongside AI, learning how to harness its capabilities rather than be displaced by them.

Privacy and Security

Generative AI systems require vast amounts of training data to function effectively, and that data often includes personal information. This raises concerns about privacy and data security, especially when AI systems are used to create content based on sensitive information.

For instance, AI-powered chatbots and virtual assistants could potentially generate responses based on personal conversations or private details, raising fears about how data is stored, shared, and used.

What can be done?

To address privacy concerns, strict data protection measures should be implemented in the development and deployment of AI systems. This includes ensuring that AI systems comply with data protection laws like GDPR (General Data Protection Regulation) and that users have control over their data. Additionally, AI systems should follow privacy-by-design principles, so that protecting user information is built in from the start rather than added later.
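As a small illustration of one such data protection measure, the sketch below masks email addresses and phone numbers in text before it is stored or passed to a model. It is a simplified example under stated assumptions: the `redact` helper and both patterns are hypothetical, the regular expressions will miss many real-world formats, and production systems typically rely on dedicated PII detection tooling rather than two hand-written patterns.

```python
import re

# Simplified patterns for illustration only; they do not cover every format.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


message = "Reach me at jane.doe@example.com or +1 (555) 010-9999 after 5pm."
clean = redact(message)
print(clean)
```

Redacting at the point of collection means that later stages, such as logging or training, never see the raw personal details.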

Conclusion

Generative AI holds tremendous promise, but with great power comes great responsibility. As AI continues to evolve and become a part of everyday life, it is essential to address the ethical considerations surrounding its use. Developers, users, and policymakers must collaborate to ensure that generative AI is deployed in ways that promote fairness, transparency, accountability, and respect for human rights. By doing so, we can harness the power of AI to create a future that is both innovative and ethically responsible.