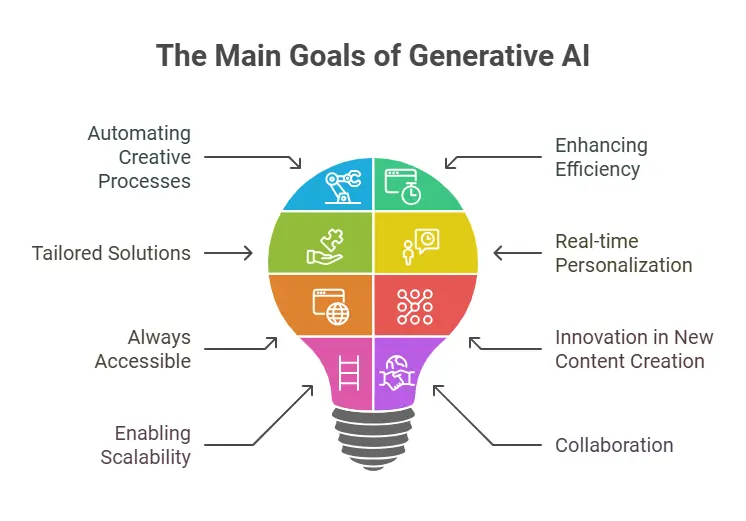

Generative AI has become one of the most powerful and exciting technological advancements of recent years. From AI-generated art and writing to music composition and deepfakes, these systems can produce results that are often hard to distinguish from human-made content. As they evolve, controlling their output becomes ever more critical: they offer tremendous opportunities, but unchecked output carries significant risks. In this post, we look at why controlling the output of generative AI systems matters for ethical, legal, societal, and technological reasons.

Ethical Considerations: Preventing Harmful Content

The most pressing reason for controlling the output of generative AI systems is the potential for harm. Without proper safeguards, AI models can generate content that is offensive, discriminatory, misleading, or dangerous. For example, generative AI can produce hate speech, violent imagery, or biased narratives. Such outputs can perpetuate stereotypes, spread misinformation, and hurt vulnerable communities.

AI systems often learn from vast datasets that include content created by humans. However, these datasets can contain biases, prejudices, and harmful ideologies. If the AI is not sufficiently monitored and controlled, it may replicate or even amplify these biases. For instance, an AI trained on biased data could generate texts that reinforce gender stereotypes or create images that perpetuate racial biases.

By carefully controlling what AI models can produce, we can mitigate these risks and steer generative AI toward positive, ethical uses. Developers and companies need to implement clear guidelines and filters that detect harmful content and block it before it reaches users. No filter is perfect, but this helps keep AI a tool for good rather than a source of harm.
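
As a rough illustration of what such a filter might look like, here is a minimal Python sketch. Everything in it is an assumption made for the example: the `BLOCKED_PATTERNS` list, the `filter_output` function, the optional `score_fn` classifier hook, and the 0.8 threshold are hypothetical names and values, not part of any particular product or library. Real systems typically rely on trained classifiers and maintained policy lists rather than a couple of regular expressions.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical placeholder patterns; a real policy list would be far larger
# and maintained by people who understand the deployment context.
BLOCKED_PATTERNS = [
    re.compile(r"\bblocked_term_a\b", re.IGNORECASE),
    re.compile(r"\bblocked_term_b\b", re.IGNORECASE),
]

REFUSAL = "This content was withheld because it may violate the content policy."


@dataclass
class FilterResult:
    allowed: bool                 # True if the text may be shown to the user
    text: str                     # the original text, or a refusal message
    reason: Optional[str] = None  # why the text was blocked, if it was


def filter_output(
    text: str,
    score_fn: Optional[Callable[[str], float]] = None,
    threshold: float = 0.8,
) -> FilterResult:
    """Check generated text before it is returned to the user."""
    # Step 1: cheap pattern check against the blocklist.
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(text):
            return FilterResult(False, REFUSAL, f"matched pattern: {pattern.pattern}")

    # Step 2: optional classifier (assumed to return a harm score in [0, 1]).
    if score_fn is not None:
        score = score_fn(text)
        if score >= threshold:
            return FilterResult(False, REFUSAL, f"classifier score {score:.2f}")

    return FilterResult(True, text)
```

In this sketch the filter sits between the model and the user: the application would call `filter_output(model_response)` and display `result.text`, keeping `result.reason` for internal review.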

Legal and Regulatory Compliance

Another crucial aspect of controlling AI output is compliance with legal and regulatory frameworks. Different countries have different laws about what content can be generated and shared. For example, in some jurisdictions, creating or disseminating defamatory content, malicious deepfakes, or certain kinds of fake news is illegal. Generative AI systems could easily be misused to produce content that violates these laws.

Deepfakes, which are hyper-realistic videos or images created by AI to impersonate individuals, have become a growing concern. These deepfakes can be used to spread false information, commit fraud, or even harass individuals. In 2018, a study by the University of Washington revealed that deepfake videos could easily manipulate public opinion and disrupt democratic processes, such as elections. Without proper control, these systems could undermine trust in media and cause significant legal and societal issues.

Regulatory bodies are starting to take action, and developers must comply with these regulations to avoid legal repercussions. By regulating the output of AI systems, companies can prevent the creation of content that could breach laws, protect individuals’ rights, and help maintain societal trust.

Preventing the Spread of Misinformation

Misinformation is one of the most insidious threats in the digital age, and generative AI can play a significant role in either spreading or mitigating it. AI can create convincing but entirely fabricated narratives, news stories, and doctored media. It can also generate this content at staggering speed, which makes fake news easy to produce and disseminate at scale.

Controlling the output of generative AI systems helps prevent the accidental or intentional creation of misleading information. AI systems can be trained to recognize patterns associated with disinformation and block the generation of content that fits those patterns. This can help combat the spread of hoaxes, conspiracy theories, and other types of harmful content. By maintaining control over AI output, developers can ensure that these systems are used to enhance truth and transparency rather than perpetuate falsehoods.

Moreover, generative AI can be an essential tool for fact-checking. AI systems trained to assess the accuracy of information can play a critical role in identifying misinformation and keeping it from spreading in the first place. When combined with human oversight, these systems can be a valuable resource for maintaining the integrity of information across platforms.
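
One common way to combine automated checks with human oversight is to route content by risk score: low-risk content is published, high-risk content is blocked, and everything in between goes to a human reviewer. The sketch below assumes some upstream model already produces a `risk_score` between 0 and 1; the `triage` function, the `Decision` enum, and both thresholds are hypothetical choices for illustration.

```python
from enum import Enum


class Decision(Enum):
    PUBLISH = "publish"
    HUMAN_REVIEW = "human_review"
    BLOCK = "block"


def triage(risk_score: float, review_at: float = 0.4, block_at: float = 0.85) -> Decision:
    """Route content based on an assumed misinformation risk score in [0, 1]."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score >= block_at:
        return Decision.BLOCK          # confident enough to block automatically
    if risk_score >= review_at:
        return Decision.HUMAN_REVIEW   # uncertain: a person makes the call
    return Decision.PUBLISH            # low risk: let it through
```

The middle band is the important design choice here: it keeps people in the loop for exactly the cases where the automated system is least reliable.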

Safeguarding Privacy and Security

Generative AI systems have the ability to create content that closely resembles real people or events, which raises significant concerns about privacy and security. For example, AI-generated faces or voices could be used to impersonate individuals, creating a serious risk for identity theft, fraud, or other malicious activities. Additionally, AI systems might generate content that violates an individual’s privacy by exposing sensitive information or generating realistic but false representations of private moments.

In the case of synthetic media, there is a growing risk of malicious actors using AI to impersonate individuals for harmful purposes, such as blackmail or defamation. The use of AI to create fraudulent documents, fake interviews, or even illicit communications can also undermine the security of both individuals and organizations.

By controlling the output of generative AI systems, developers can prevent such abuses. Strong ethical guidelines, monitoring systems, and safeguards can be implemented to block the creation of content that violates privacy rights or threatens security. This ensures that generative AI remains a tool for innovation and creativity, rather than a weapon for malicious purposes.
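
One simple safeguard of this kind is to scan generated text for personal details before it is shown. The Python sketch below is only an illustration under stated assumptions: the `redact_pii` function and the two regular expressions are hypothetical, cover only email addresses and one US-style phone format, and would miss many real-world cases. Production systems usually use dedicated PII-detection tooling.

```python
import re
from typing import Tuple

# Deliberately simple, illustrative patterns; not exhaustive.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact_pii(text: str) -> Tuple[str, int]:
    """Replace emails and phone numbers with placeholders.

    Returns the redacted text and the number of replacements made.
    """
    text, n_email = EMAIL_RE.subn("[REDACTED EMAIL]", text)
    text, n_phone = PHONE_RE.subn("[REDACTED PHONE]", text)
    return text, n_email + n_phone


redacted, count = redact_pii("Reach Jane at jane.doe@example.com or 555-123-4567.")
print(redacted)  # Reach Jane at [REDACTED EMAIL] or [REDACTED PHONE].
print(count)     # 2
```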

Maintaining Human Creativity and Autonomy

While generative AI can assist with creativity, human creativity and autonomy should remain at the core of our decision-making. AI-generated content should be seen as a tool to enhance human creativity, not replace it. Left unchecked, generative AI systems could lead to over-reliance on AI-generated content, potentially stifling authentic human creativity.

By controlling the output of generative AI, we ensure that these systems are used responsibly to complement and enhance human ingenuity. For instance, artists, musicians, and writers can use AI tools to inspire their work or speed up their creative process, but they should remain in control of the final output. This balance between AI and human involvement will preserve the uniqueness and authenticity of human creativity while benefiting from the capabilities of generative AI.

Ensuring Accountability

Finally, controlling AI output is essential for ensuring accountability. When generative AI systems produce harmful or illegal content, it can be difficult to pinpoint who is responsible. Is it the developer, the user, or the AI itself? Without clear control mechanisms, there may be a lack of accountability for the consequences of AI-generated content.

By imposing strict controls on the output of generative AI systems, companies can make sure someone is always accountable for how those systems are used. Developers should implement systems for tracking and reviewing the output of AI models, so that the content generation process is transparent and traceable. This not only protects users and society but also holds developers responsible for their creations.
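
To make tracking and traceability a little more concrete, here is a minimal Python sketch of an audit log for generated content. The record fields, the `make_audit_record` and `append_audit_record` functions, and the JSON Lines file are all assumptions chosen for illustration, not a standard. The sketch stores SHA-256 hashes instead of the raw prompt and output so the log itself does not become a privacy risk.

```python
import hashlib
import json
import time
import uuid
from typing import Optional


def _sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def make_audit_record(
    prompt: str,
    output: str,
    model_id: str,
    user_id: str,
    allowed: bool,
    reason: Optional[str] = None,
) -> dict:
    """Build one traceable record for a single generation request."""
    return {
        "record_id": str(uuid.uuid4()),    # unique ID for later review
        "timestamp": time.time(),          # seconds since the Unix epoch
        "model_id": model_id,              # which model produced the output
        "user_id": user_id,                # who requested it
        "prompt_sha256": _sha256(prompt),  # hash only, not the raw text
        "output_sha256": _sha256(output),
        "allowed": allowed,                # whether the output was released
        "reason": reason,                  # why it was blocked, if it was
    }


def append_audit_record(record: dict, path: str = "audit_log.jsonl") -> None:
    """Append the record as one JSON object per line."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")
```

With records like these, a reviewer can later answer which model produced a given piece of content, for which user, and whether a safeguard intervened.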

Conclusion

Generative AI holds enormous potential to revolutionize industries and transform society in many positive ways. However, as with any powerful tool, its unchecked use can have significant negative consequences. By controlling the output of generative AI systems, we can mitigate risks related to ethics, legal issues, misinformation, privacy, security, creativity, and accountability. As we continue to advance in AI technology, it is essential that we implement strong controls to ensure these systems benefit humanity while minimizing their potential for harm. Only through responsible oversight and careful regulation can we harness the full power of generative AI in a way that promotes trust, safety, and innovation.